I am a postdoctoral fellow in the Sierra team at Inria Paris. I currently work with Francis Bach on sampling algorithms for diffusion models.

I obtained my PhD in applied mathematics from École polytechnique, where I worked in the Randopt Inria team, located at CMAP. My PhD advisors were Anne Auger and Nikolaus Hansen.

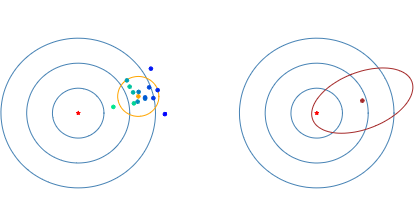

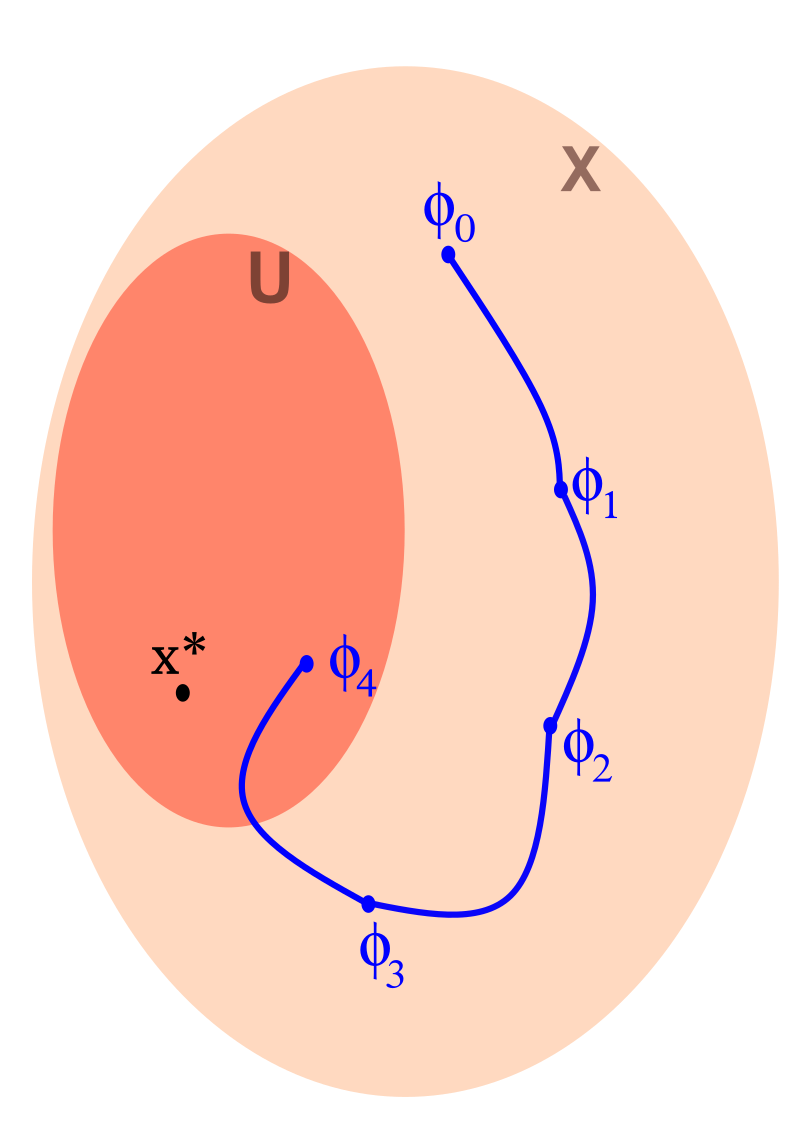

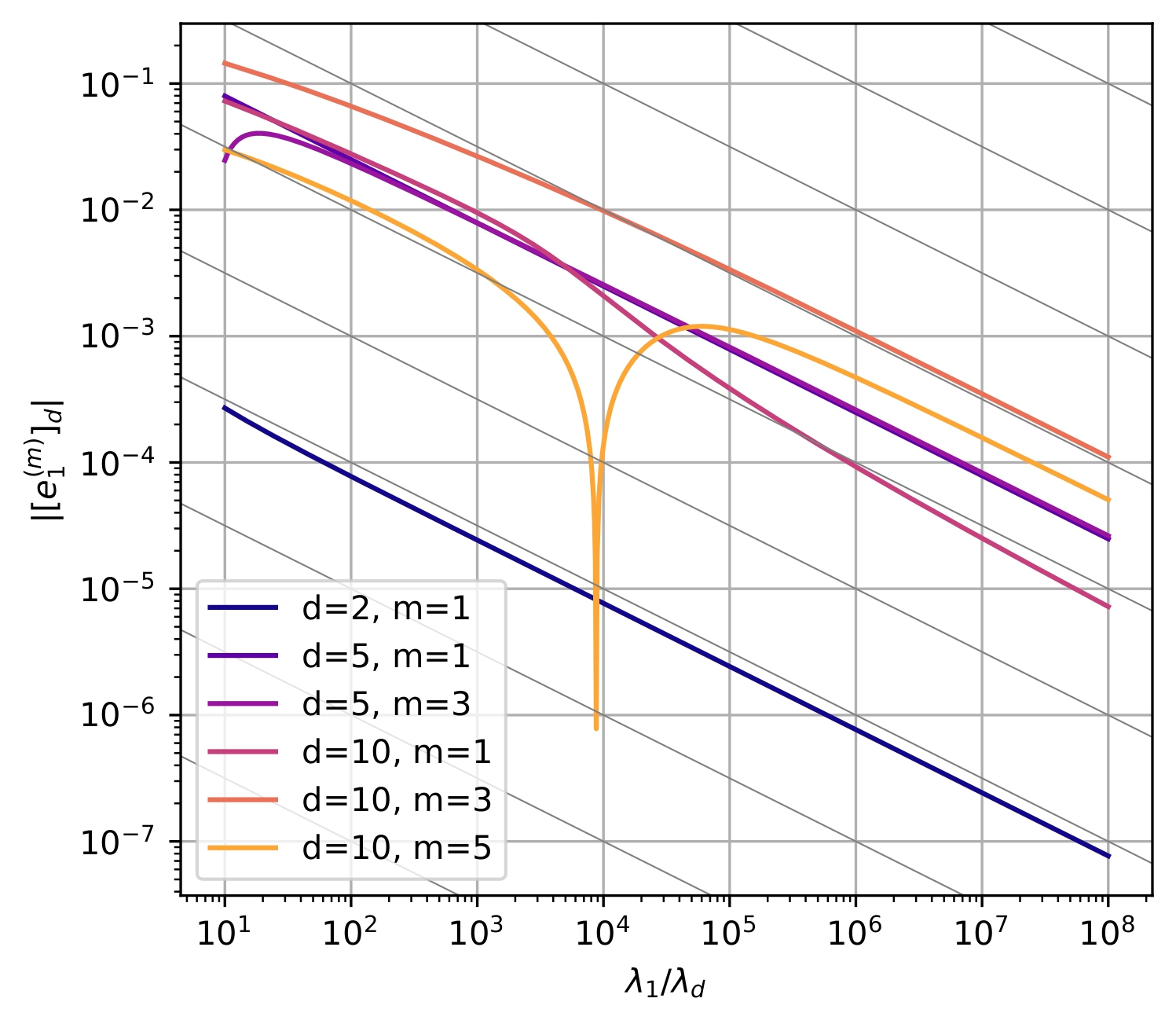

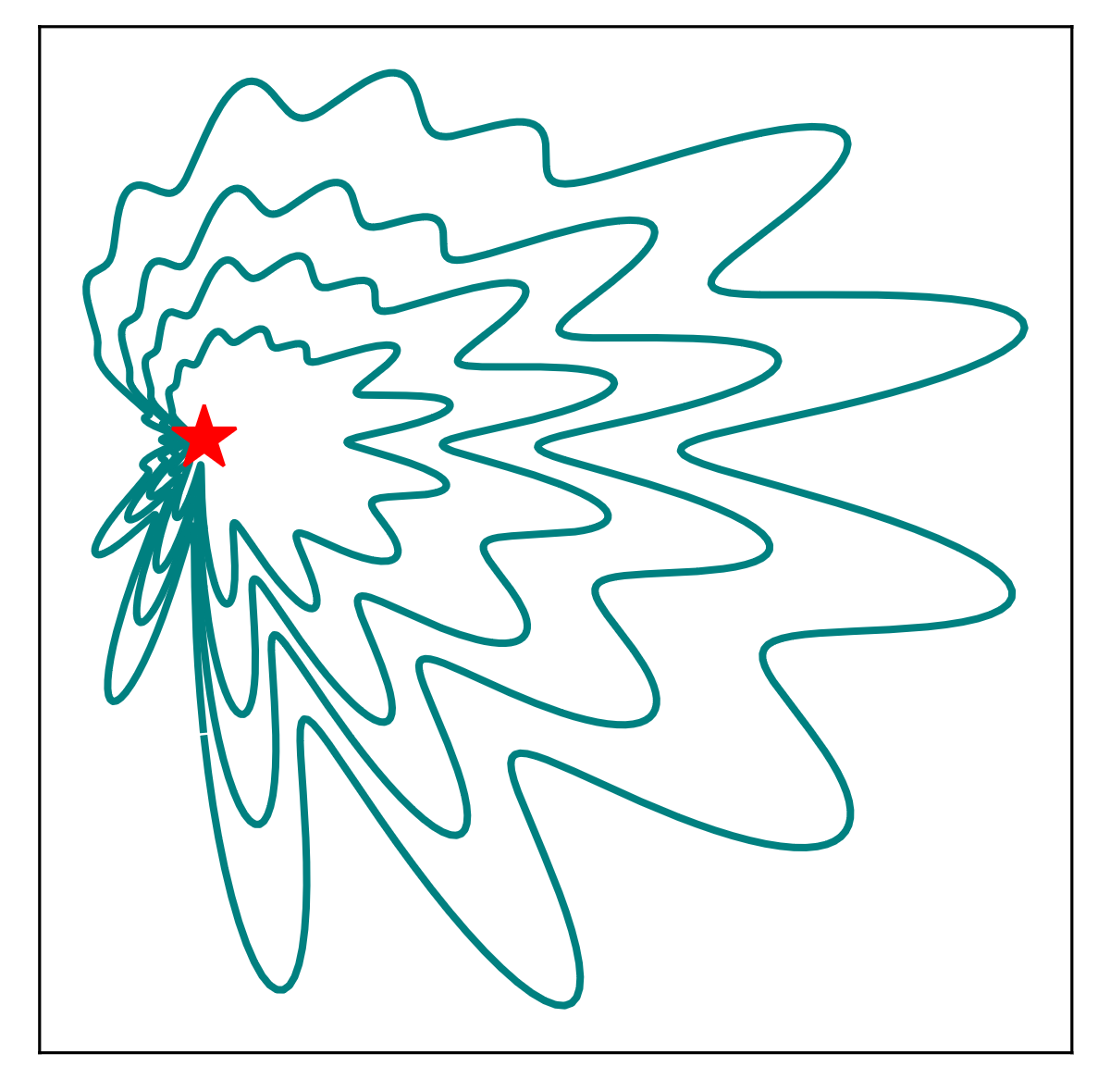

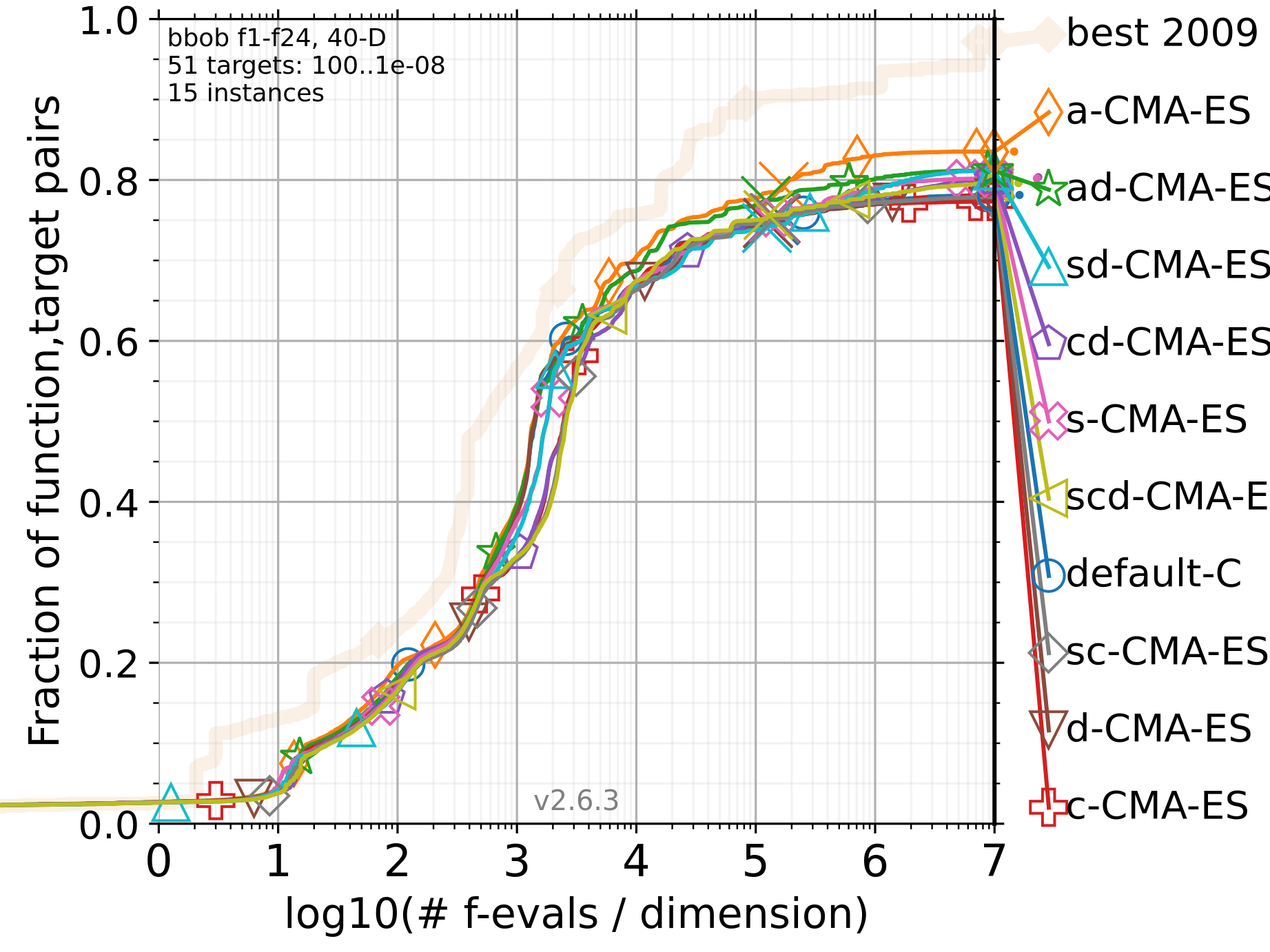

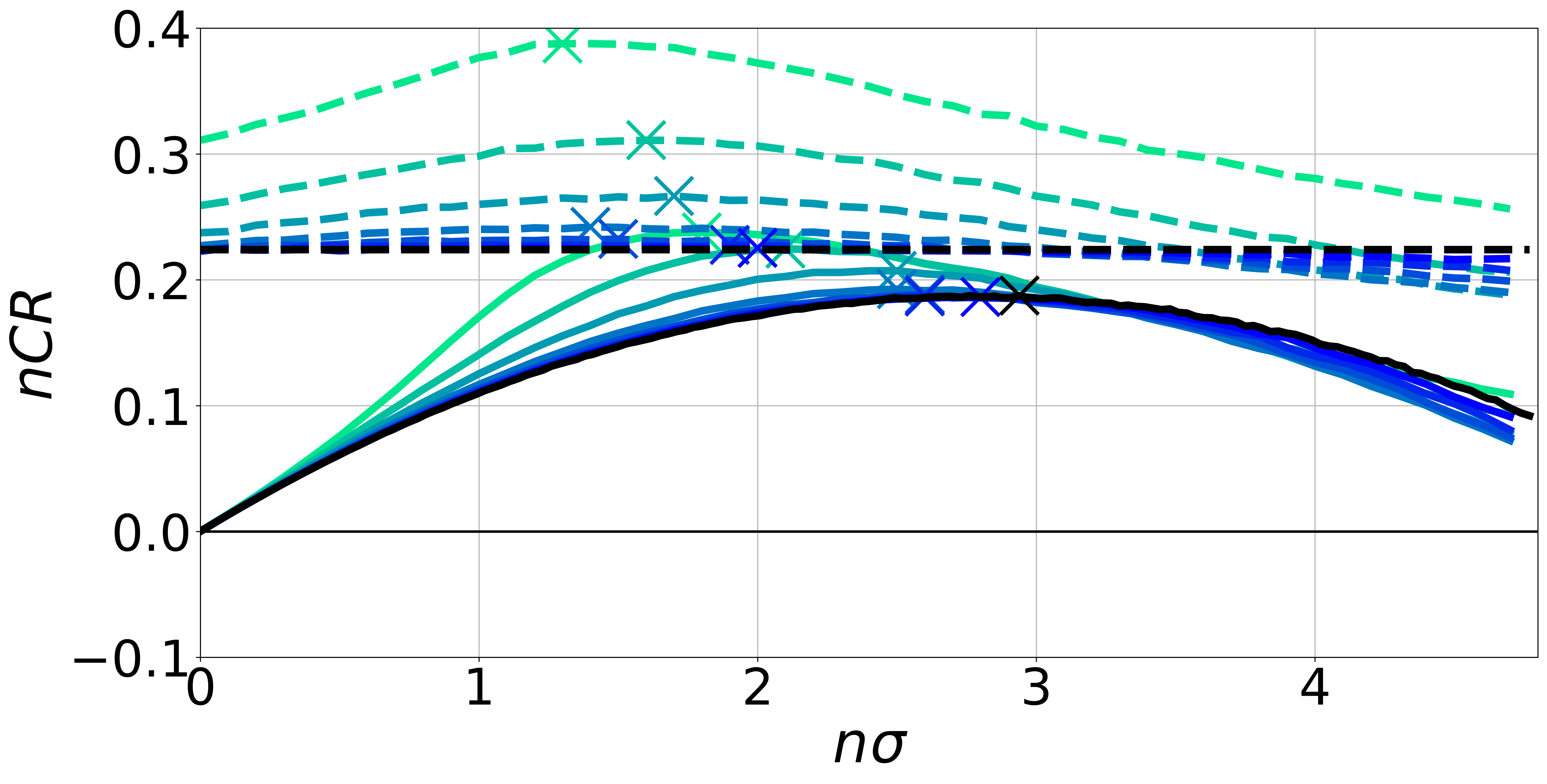

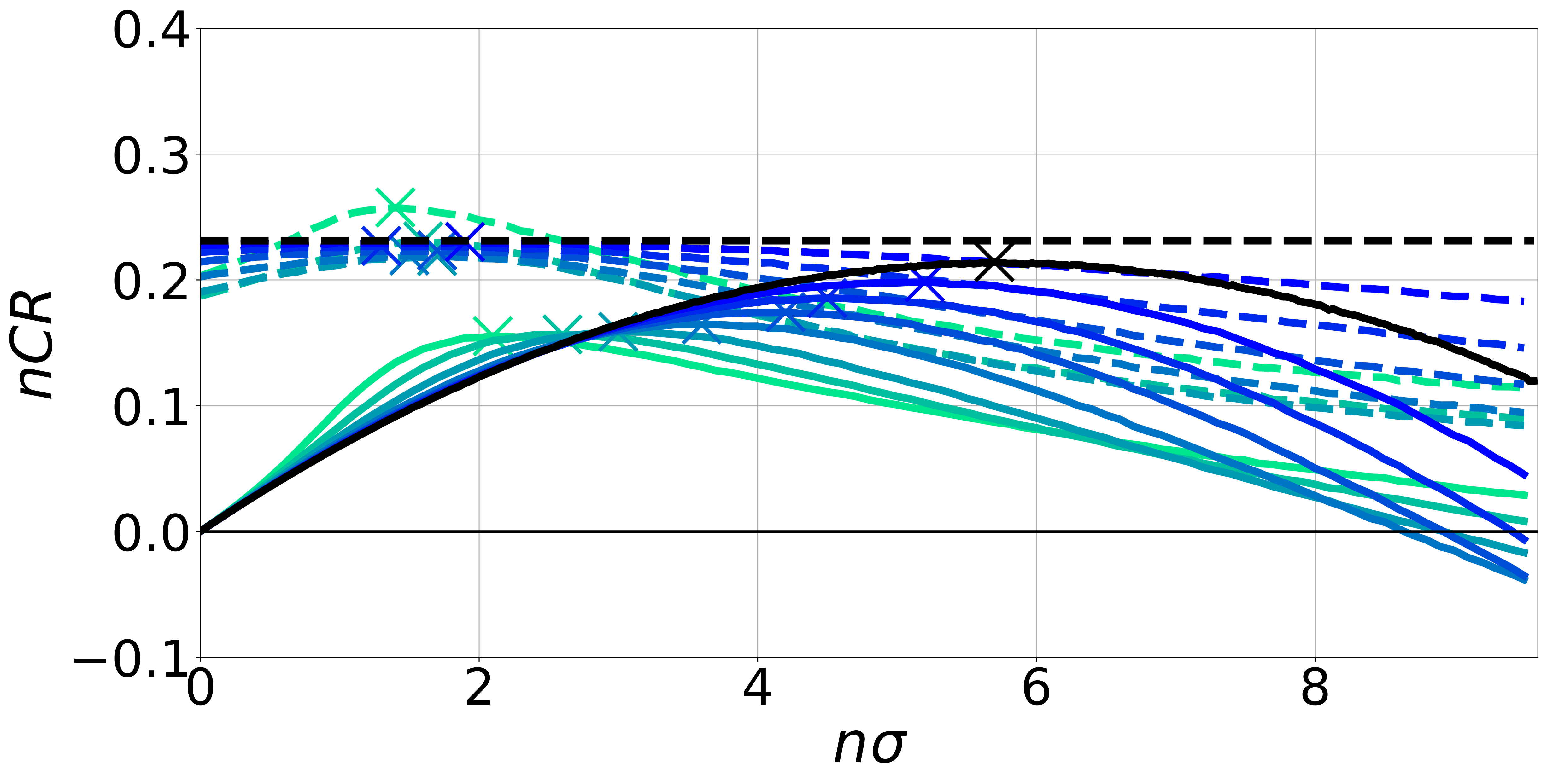

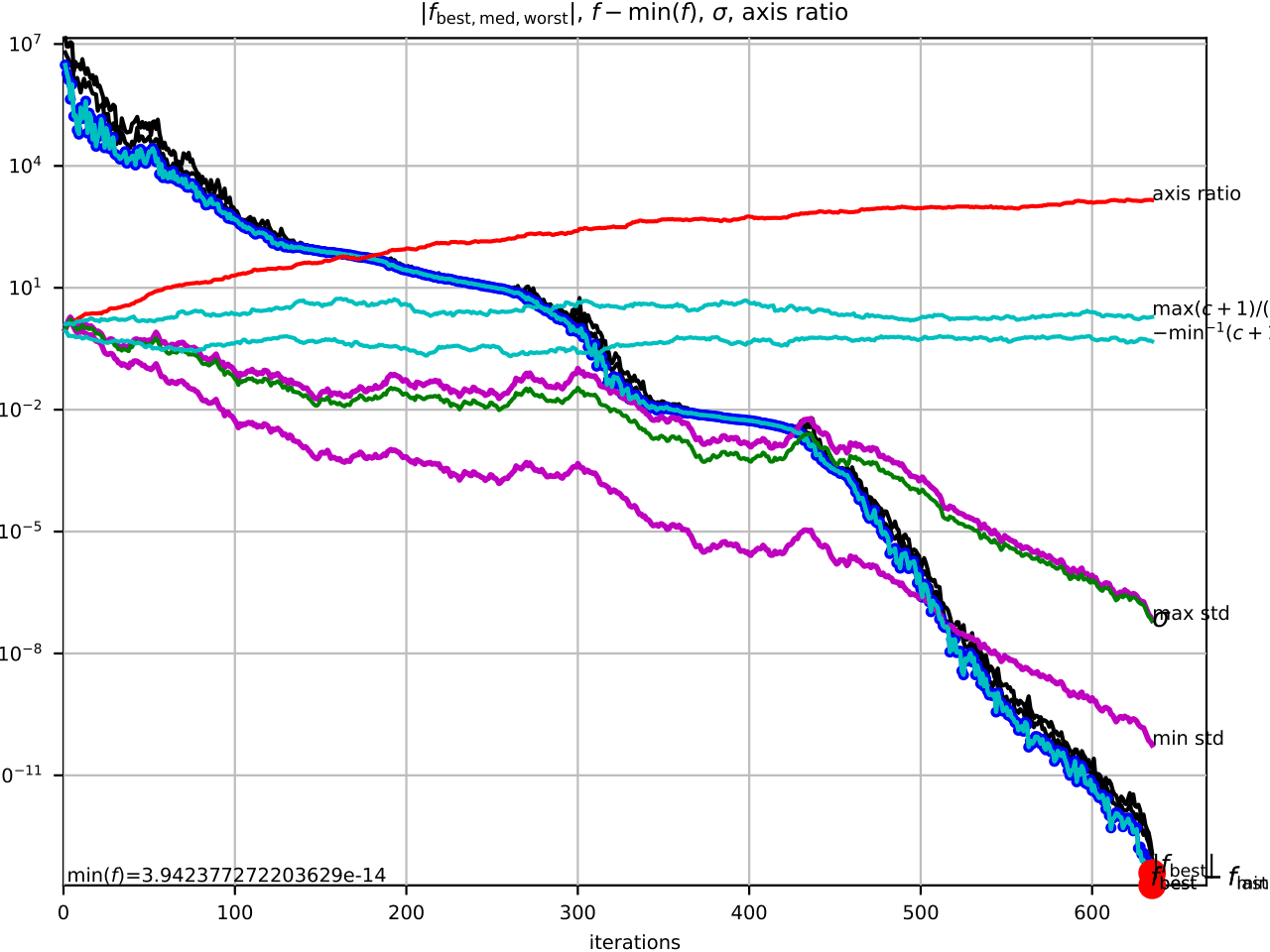

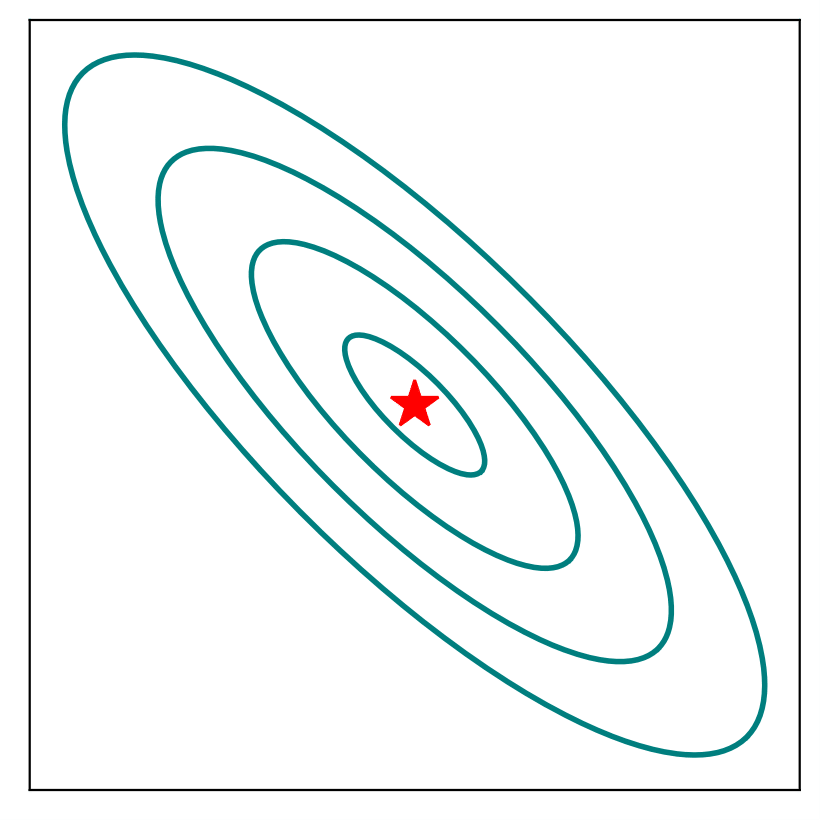

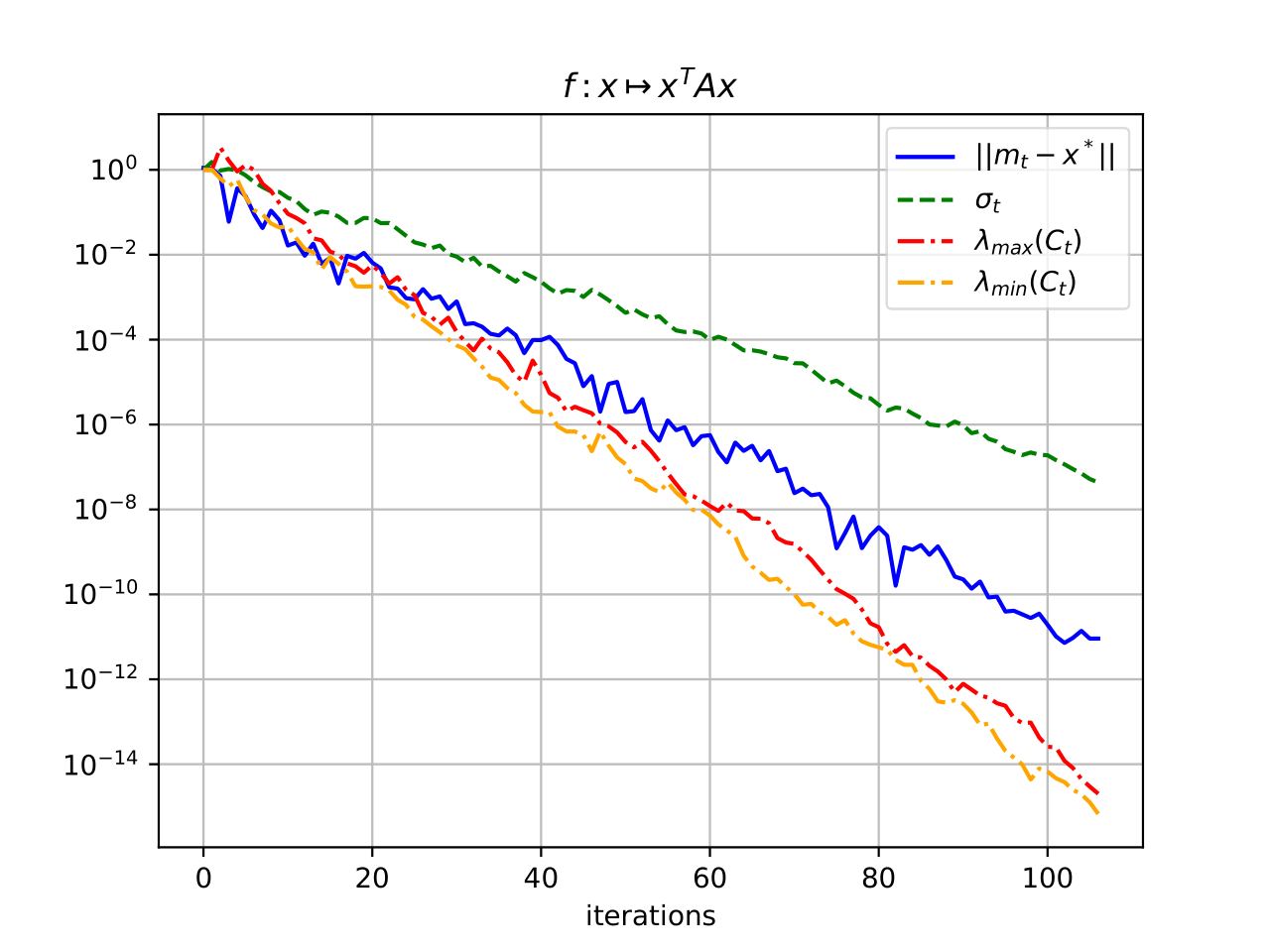

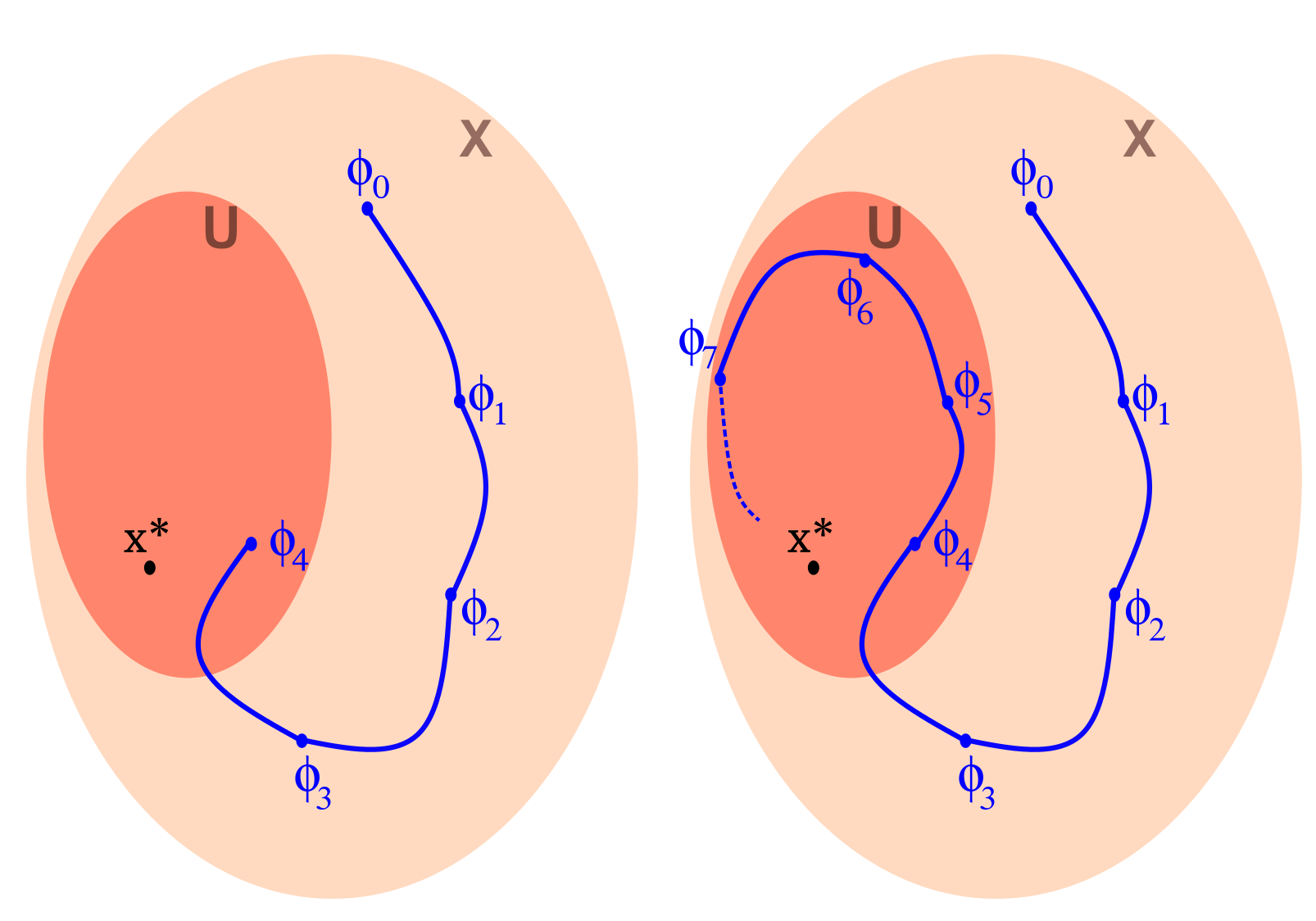

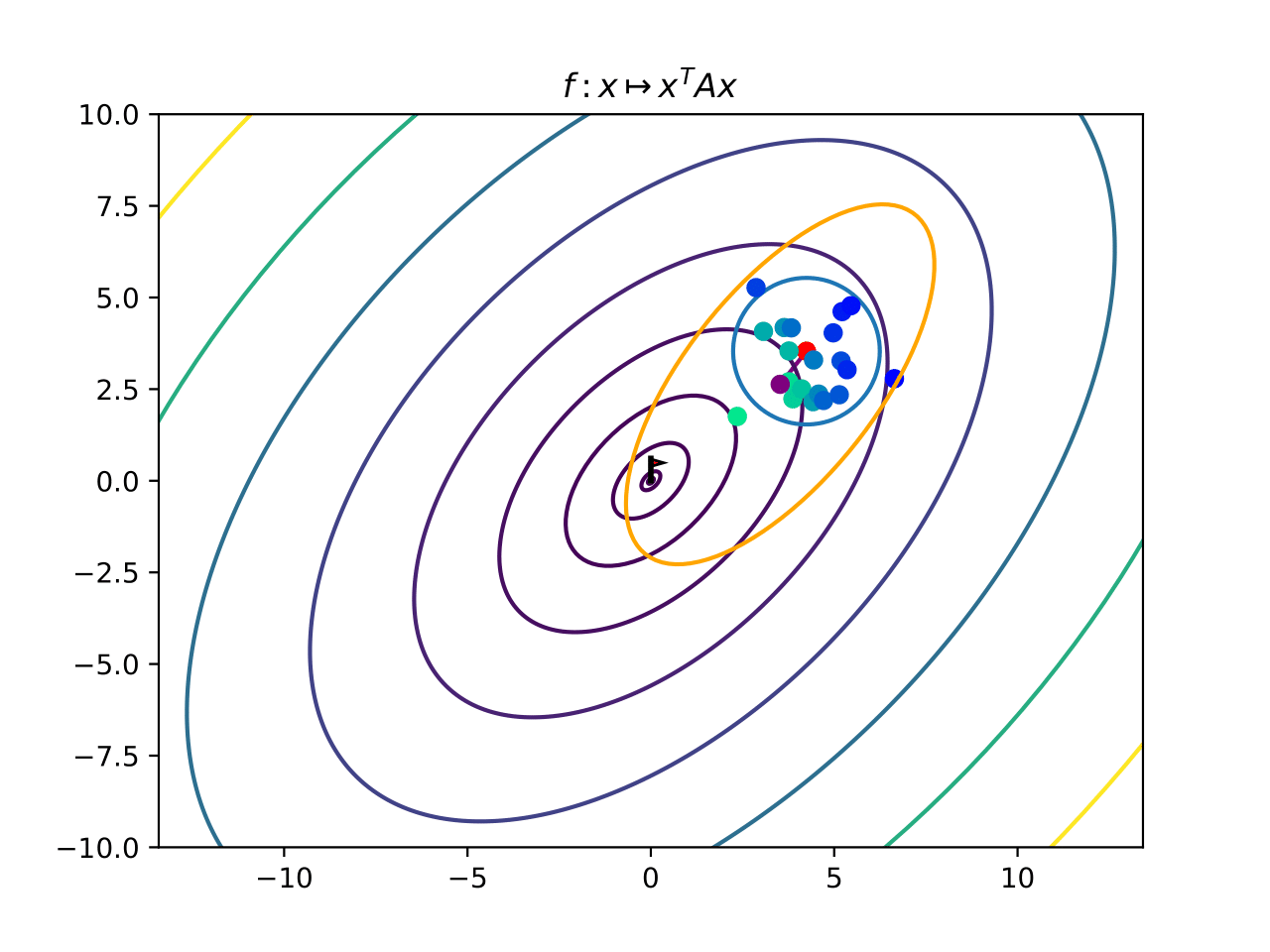

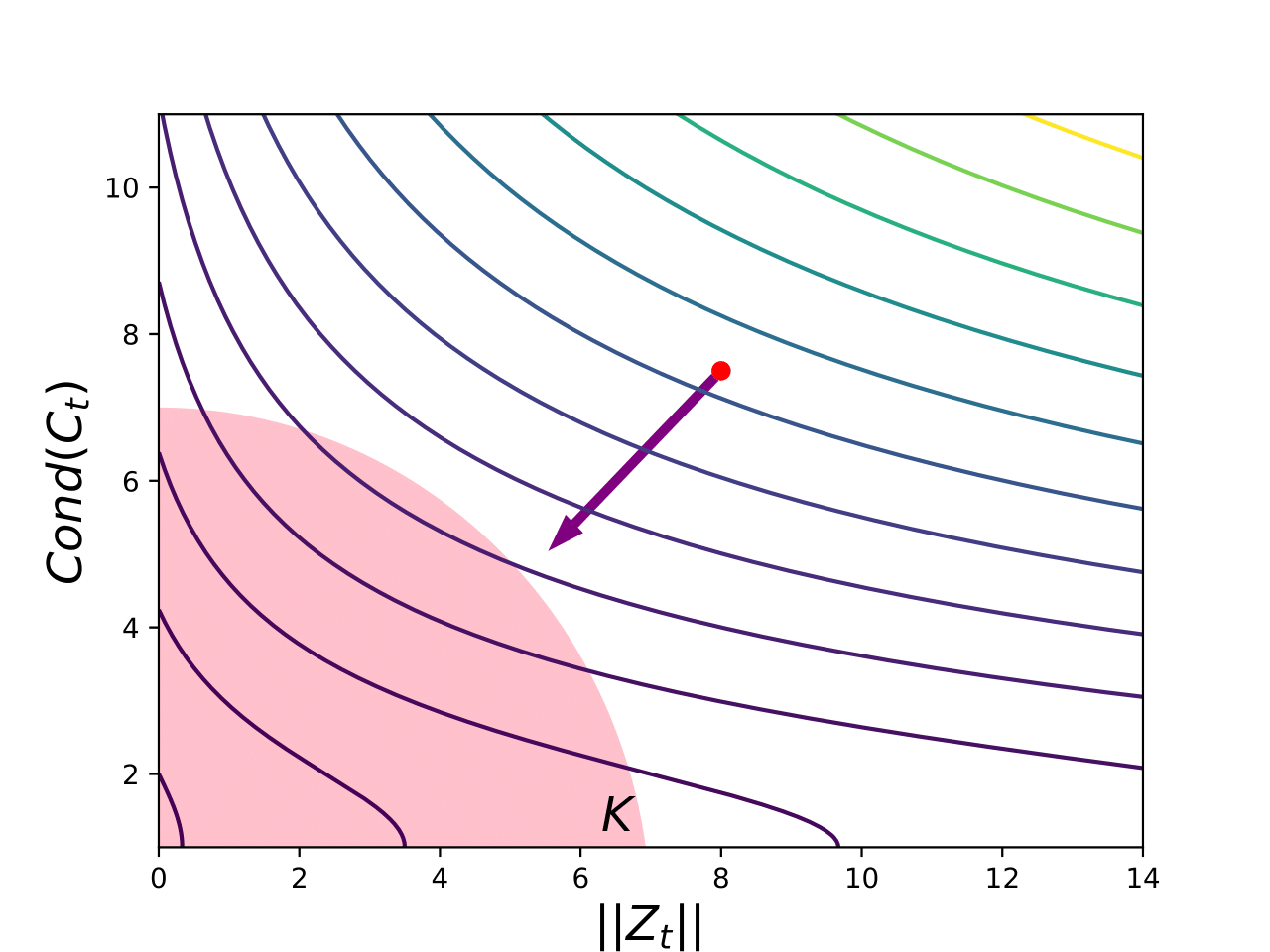

During my PhD, I worked on theoretical aspects of the CMA-ES algorithm (Evolution Strategy with Covariance Matrix Adaptation), specifically on proving its convergence.